
I get asked a lot which cloud provider I prefer, even by people who know me well, and I think the answer I give lately really surprises them. My answer is: a combination of all of them, plus colocated environments. When it comes to the major players in the cloud world, namely GCP, Azure and AWS, most of the offerings are pretty much on par with each other. The preference people have really comes down to trust in the company’s management of the environment, price, friendliness and familiarity of the interface, and clear visibility into what’s going on. That works as long as you’re only using one provider, but in many enterprises there are really good reasons to use a combination of cloud providers, combined with on-premise and colocated hardware. Some of these reasons include the risk to availability in extreme cases of a global outage at a single provider (and there are examples of this in the past), and others include niche offerings that are only available from one provider and only used by a specific department. Let’s take a look at the tools some of the top technology companies use to manage their hybrid cloud environments.

The Old and Obsolete IT Department

The IT department was created at the dawn of the age of technology, and it served businesses well back then. Corporations were formed by a handful of really smart individuals, one of whom took the role of President or CEO and set the vision of the overall company. This person then hired the core team of business leaders: Chief Technical Officer, Chief Financial Officer, Chief Operations Officer, Chief Marketing Officer, Senior Vice President of Sales, General Counsel and so on. The most critical roles that drove the business were of course the heads of the Sales and Marketing departments, driving the CEO’s vision. They did the market research, identified revenue streams and new markets, and set the company’s heading. This means they chose who the target audience of their products was going to be, and which products they would invest in and develop.

The CTO was the facilitator when it came to technology; he was present at the table in those discussions, and made known what was technically feasible and what the cost of the investment would likely be. Thus, the IT department was born. Its mission: to bring together some of the smartest and most technical people the company could find, put them all in one room, and drive them to forge relationships with the rest of the business departments to facilitate any technological needs the company had.

When software came along, that was a new thing as well, and companies had to figure out whether it was still IT or something else. Most of the time, it became “application teams”, which also reported to the CTO for the most part. IT then was networking, hardware and virtualization environments, corporate office and printer management, managing the organization’s corporate directory and email servers, and so on. Application teams would focus on developing the software the company needed; once the software was ready, they would package it up, write instructions on how to deploy it, and hand it off to IT to install and maintain. The IT department now of course also took on the role of deploying this software and maintaining it in production, at least from an operational standpoint. Next, in the 90s, security started to become a concern, and again the heroes of IT stepped in and became the champions of yet another thing.

Fast forward a few decades, and IT basically either has an incredible budget, with lots of granular procedures and sub-teams focused on each of the above areas or more; or it is a small, cross-functional, under-budgeted team doing its best to keep things running. In either case one thing is obvious: there are inevitable delays when something crosses boundaries.

This type of organizational structure of course is also what led to the adoption of the waterfall model in software development. From a CEO’s perspective, you ended up having the smartest people at the top, the Marketing and Sales leads, who would set the vision and delegate down to directors of divisions, who would break things down more and delegate to research teams and project managers, who would then break the problem into byte-size tasks to be handed down to programmers to turn into machine logic/code.

Joseph Peppard’s paper, The Metamorphosis of the IT Unit, is worth reading for a deeper analysis: https://mitsloan.mit.edu/ideas-made-to-matter/overdue-a-new-organizing-model-it

Weaving Tech-Enablement Into the Fabric of the Broader Enterprise

The problem today is not a lack of skills; it has rarely ever been that. There are a lot more talented people on the planet than one would think at first glance. The problem with many struggling companies, I think, is one of organization. Looking at today’s top tech companies, I can’t imagine Google having an “IT department”. I don’t think anyone can; it wouldn’t make any sense. Then we see companies like GitHub that for the longest time didn’t even have managers, let alone an IT department. Most modern tech companies in fact do not have a traditional structure, because they wouldn’t be as successful if they did.

Modern technology-enabled companies are in fact structured based on what their empirical data tells them is right for them. This starts with metrics: setting KPIs, and collecting and analyzing data about both the product and the process used to build it. In software we saw this start with the ditching of the waterfall model in favor of agile models. Teams only commit to a solid, estimated schedule a few weeks or up to a couple of months ahead, then deliver software in cycles, collect feedback, and optimize the process to deliver a better product more efficiently.

This change in software development culture is where the old organizational structure and the modern structure start to conflict with one another. The marketing and sales visionaries set the mission; the agile teams collect valuable feedback from running the product; and now the people in the middle, be it “middle management”, product managers, directors and such, are faced with some pretty big challenges: what to do when data from the two sides conflicts, or priorities aren’t aligned? Before we even get that far, we have the problem of recording data and making it visible. Who’s in charge of setting up central log aggregation, getting teams to ship their data to it, developing reports and extrapolating insights from the data? Well… having everyone do it is a solid strategy, but more on that later. Every real-world story I’ve seen about this culture shift has started with one smart person on one team who wouldn’t accept the existing structure, and automated their own job.

So if not an IT department, then what? “Whatever the data tells you” is the strategy I’d take: test, measure, measure other stuff, get creative about the stuff you measure, and then act. At Google and many tech companies, we see this take the shape of software development teams, site reliability teams, security teams, operational performance teams, and so on, all within a DevOps culture. The development teams have complete control of and responsibility for getting their site from concept to production, and supporting it once it’s there. They decide everything from what programming logic and frameworks to use, to what geographic regions they need it in and what resources they’ll use. There’s no waiting on requests from another department to get them the resources they need, so they can move at peak efficiency. Site reliability teams focus on the bigger picture of running the infrastructure, and share valuable insights from their experience with the software teams so they can build more scalable systems; they also control the pipeline, and can add the tests and checks they need to ensure long-term success. Security teams come in and add their own tests and checks on top of those same pipelines, and ensure everything is delivered in a secure manner. Each team has its own priorities and builds on top of the others, without having to wait on them; nothing has to cross boundaries to get from concept to production, and we have a better, more scalable and more secure structure because of it.

This all falls under the theme of Infrastructure-as-Code, but expands into the concept of Everything-as-Code. This is not boxed infrastructure as code that lets John in IT manage everything from his laptop. The pipeline, the infrastructure, and everything that makes up the environment from development to production should be jointly owned by SRE (Site Reliability Engineering) and SWE (Software Engineering), with each building on top of the other.

Example in Practice

- SWE store application code in a Git repo

- SWE also store the build definition that is used to build the project, as YAML in the same Git repo

- SRE store Puppet and Terraform configs for all environments in a Git repo

- SWE inherits the production environment configs from the infrastructure repo, and adds their own lower environments to the same repo

- SRE, QA and ITSec teams add their own tests into both the build definition and infrastructure definition. This includes Terraform tests for resource expense segmentation checks, vulnerability scanning, encryption cipher checks, QA regression tests, performance / load tests and so on

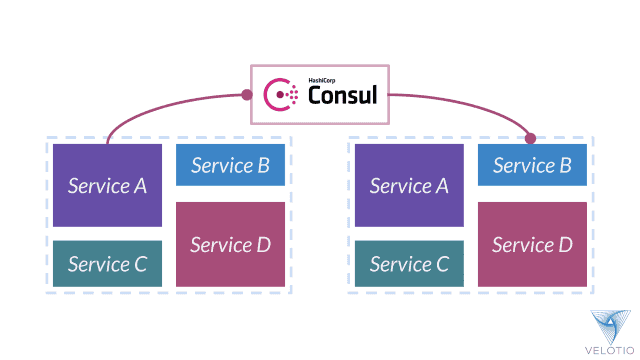

- Define service-to-service communication and all dependencies as code using HashiCorp Consul

- Make sure all changes to infrastructure code are further gated with Pull Requests, which require approval from both teams. Let everyone who has a stake see all the configs, so that everyone can contribute to the project

- Keep all encryption certificates, environment passwords, connection strings and such stored in HashiCorp Vault. Wrap the master key with a FIPS 140-2 Level 2 or higher HSM such as Google CloudHSM; credentials should never exist in the Git repos

- SWE builds health checks into the application to test every dependency; SRE adds their own health checks; QA and ITSec add more as needed

- Pipelines are completely automated from first push to production. Every developer on the team can basically push to production, and they push many times a day:

    - SWE creates a branch, does the work, submits a pull request

    - Build pipeline takes over, compiles the code, runs unit tests, runs security and infrastructure tests, spins up a new environment and deploys the build to it, runs performance tests and regression tests

    - Logs from every step in the pipeline are sent to a central log aggregation server and tagged with the PR number

    - If any check fails, the SWE that submitted the code is pinged on Slack to be aware and fix it

    - SWE team (and, if infrastructure was changed, SRE team) is pinged via Slack that the PR passed all the checks and is ready for review. Once all reviews are in, it goes into master

    - After it gets merged into master, it gets picked up by the next release pipeline, versioned, deployed to Staging and all necessary infrastructure, security, regression and load tests get run on it automatically

    - As soon as everything passes on Staging, it gets queued up to be released to production. The production pipeline starts making any necessary changes to infrastructure and routing, and creates new containers with the new version of the code. Once the containers’ health checks are green, traffic starts routing to the new version of the application. If production performance metrics degrade or errors start popping up, traffic is rerouted back to the previous version of the application, and Ops/SRE teams are pinged via Slack
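The gating and rollback logic in the steps above can be sketched in a few lines of Python. This is only an illustration under assumptions: `PipelineRun`, `run_checks`, `choose_live_version`, the 1% error threshold and the PR number are all hypothetical names and values, and the lists stand in for the real log aggregation server and Slack.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class PipelineRun:
    """One pipeline execution for a single pull request (hypothetical type)."""
    pr_number: int
    logs: List[str] = field(default_factory=list)           # stand-in for central log aggregation
    notifications: List[str] = field(default_factory=list)  # stand-in for Slack messages

    def log(self, message: str) -> None:
        # Every log line carries the PR number so it can be filtered centrally.
        self.logs.append(f"[PR-{self.pr_number}] {message}")


def run_checks(run: PipelineRun, stages: List[Tuple[str, Callable[[], bool]]]) -> bool:
    """Run stages in order; stop at the first failure and notify the author."""
    for name, check in stages:
        passed = check()
        run.log(f"{name}: {'passed' if passed else 'FAILED'}")
        if not passed:
            run.notifications.append(f"PR {run.pr_number}: {name} failed, please fix")
            return False
    run.notifications.append(f"PR {run.pr_number}: all checks passed, ready for review")
    return True


def choose_live_version(previous: str, candidate: str, health_green: bool,
                        error_rate: float, max_error_rate: float = 0.01) -> str:
    """Send traffic to the candidate only while it is healthy; otherwise roll back."""
    if health_green and error_rate <= max_error_rate:
        return candidate
    return previous


# Hypothetical run: the security scan fails, so later stages never execute.
example = PipelineRun(pr_number=42)
ready = run_checks(example, [
    ("compile", lambda: True),
    ("unit tests", lambda: True),
    ("security scan", lambda: False),
    ("load tests", lambda: True),
])
```

The point of the shape is that every team adds stages to the same list, and nobody has to be asked for permission to run them.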

Modern Tools

In a world where new technologies were invented, fulfilled their purpose, and were sunset in the time it took me to write this post, it’s hard to ever come up with a comprehensive list, and that list wouldn’t be of too much value, because it all depends on what the right fit is. But here are some of the tools used by today’s top-performing companies:

HashiCorp Terraform

Terraform is a tool for building, changing, and versioning infrastructure safely and efficiently. It allows you to define your infrastructure in HashiCorp Configuration Language (HCL) or JSON, which can be stored in Git in a locked branch. That forces changes to be accepted only through Pull Requests, where quality gates, security gates and cost gates can be built in to ensure each team’s concerns are met without getting in the way of the release. It also includes Execution Plans, which let teams see the likely impact of any change and reduce the likelihood of accidental, unintended secondary changes.

Check out HashiCorp’s site for a comprehensive introduction: https://www.terraform.io/intro/index.html

https://www.hashicorp.com/resources/everything-as-code-with-terraform
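As a rough sketch of what a pull request gate on an Execution Plan could look like: a saved plan can be exported with `terraform show -json`, and a small script can then flag anything that would be destroyed or replaced. The function name `destructive_changes` and the resource addresses below are made up for illustration, and the sample is a hand-trimmed fragment, not real plan output.

```python
import json
from typing import Dict, List


def destructive_changes(plan: Dict) -> List[str]:
    """Return the addresses of resources the plan would delete or replace."""
    flagged = []
    for resource in plan.get("resource_changes", []):
        actions = resource.get("change", {}).get("actions", [])
        # A replacement shows up as ["delete", "create"] or ["create", "delete"].
        if "delete" in actions:
            flagged.append(resource["address"])
    return flagged


# Hand-written sample in the shape of `terraform show -json <planfile>` output.
sample_plan = json.loads("""
{
  "resource_changes": [
    {"address": "google_compute_instance.web",
     "change": {"actions": ["update"]}},
    {"address": "google_sql_database_instance.main",
     "change": {"actions": ["delete", "create"]}},
    {"address": "google_storage_bucket.logs",
     "change": {"actions": ["no-op"]}}
  ]
}
""")

flagged = destructive_changes(sample_plan)
```

A pipeline step could fail the build, or require an extra SRE approval, whenever `flagged` is not empty.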

HashiCorp Vault + Google CloudHSM

I hope hard-coding credentials is funny to just about everyone reading this, as something nobody does anymore (or I hope they don’t), but often the credentials just move to a config file. This is where HashiCorp Vault comes into play. It allows you to store application secrets such as application credentials, database connection strings, and other sensitive settings in a central place, ensuring that they are protected and that any access to them is logged. The vault itself can also be encrypted, and its master key wrapped with a cloud provider’s Hardware Security Module-backed service such as Google CloudHSM. This means that when you dispose of a VM, you just invalidate its access key to the vault, so it can never come back online to haunt the environment. If a VM is compromised, the keys are safe, or you at least have the opportunity to lock them down. If the vault itself somehow gets compromised, no one can get into it, because its key is wrapped by a 3rd party service. Finally, by using an on-premise Vault, you don’t have to trust 3rd party vendors with the keys to the kingdom, or worry about the latency of key access.
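To make this concrete, here is a minimal sketch of an application reading a secret at startup instead of carrying it in a config file. It assumes Vault’s KV version 2 secrets engine mounted at `secret`, and a token supplied through the `VAULT_TOKEN` environment variable; the address, the `billing/db` path, the helper names and the sample response are all made up. The request is built but deliberately not sent.

```python
import json
import os
import urllib.request
from typing import Dict


def build_kv2_read_request(vault_addr: str, token: str,
                           mount: str, path: str) -> urllib.request.Request:
    """Build (but do not send) a read request for a KV version 2 secret."""
    url = f"{vault_addr.rstrip('/')}/v1/{mount}/data/{path}"
    return urllib.request.Request(url, headers={"X-Vault-Token": token}, method="GET")


def extract_secret(response_body: str) -> Dict[str, str]:
    """KV v2 nests the key/value pairs under data.data in the JSON response."""
    return json.loads(response_body)["data"]["data"]


# The token comes from the environment, never from the repository.
vault_addr = os.environ.get("VAULT_ADDR", "https://vault.example.internal:8200")
token = os.environ.get("VAULT_TOKEN", "")
request = build_kv2_read_request(vault_addr, token, "secret", "billing/db")
# urllib.request.urlopen(request) would send it; that step is skipped here.

# Hand-written sample of the response shape, for illustration only.
sample_body = json.dumps({
    "data": {
        "data": {"username": "billing_app", "password": "example-only"},
        "metadata": {"version": 3},
    }
})
secret = extract_secret(sample_body)
```

Because the token is the only thing the VM holds, revoking it is enough to cut a disposed or compromised machine off from every secret.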

Puppet Server + Foreman

Foreman and Puppet are configuration management tools. Puppet Server is configured in an agent-master architecture, in which a master node controls configuration information for a fleet of managed agent nodes. It allows you to create environment configurations that define, through Puppet’s declarative language and YAML data, what software should be installed and what configuration files should be on each VM, and to maintain the entire configuration of an environment centrally, with versioning, change control and tests.

Foreman is a complete lifecycle management tool for physical and virtual servers. It gives system administrators the power to easily automate repetitive tasks, quickly deploy applications, and proactively manage servers, on-premise or in the cloud.
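The idea behind this style of configuration management is that you declare the desired state and let the agent work out the difference. The following is not Puppet code, just a small Python sketch of that comparison, with a hypothetical `plan_convergence` function and made-up package versions.

```python
from typing import Dict, List


def plan_convergence(desired: Dict[str, str], actual: Dict[str, str]) -> List[str]:
    """Compare desired package versions with what a node reports and list the fixes."""
    actions = []
    for package, version in sorted(desired.items()):
        installed = actual.get(package)
        if installed is None:
            actions.append(f"install {package} {version}")
        elif installed != version:
            actions.append(f"change {package} {installed} -> {version}")
    # Packages that are not declared are left alone: only managed state is enforced.
    return actions


desired_state = {"nginx": "1.24", "openssl": "3.0"}   # what the central config declares
reported_state = {"nginx": "1.18", "curl": "8.0"}     # what the node currently has

actions = plan_convergence(desired_state, reported_state)
# A node that already matches the declaration needs no changes at all.
already_converged = plan_convergence(desired_state, desired_state)
```

Running the same declaration twice produces no further changes, which is what makes it safe to apply on a schedule across a whole fleet.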

Kubernetes, Docker Compose

Containerization as a whole allows developers to package an application along with its entire OS-level configuration into a well-defined package, which uses OS-level virtualization when deployed. Although similar to VMs in some ways, containers don’t put multiple concurrently running operating systems on the same box. Instead, they virtualize within the OS, so each application has its own OS from its perspective, with all the protections that brings, but under the hood only one OS kernel is running. This allows for a lot more density on the same hardware, and allows developers to bundle their own software, libraries and configuration files via a Dockerfile.

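Kubernetes is a container-orchestration system for automating application deployment, scaling, and management. Docker Compose covers the simpler case of defining and running multi-container applications, typically on a single host.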

Git

Git is a free and open source distributed version control system designed to handle everything from small to very large projects with speed and efficiency. It is easy to learn and has a tiny footprint with lightning fast performance. It manages code in repositories, which can have multiple parallel branches. Git hosting platforms can then enforce granular permissions on who can view and edit a repository, and lock a branch so that changes can only be merged into it via Pull Requests, where you can add more validation checks. Using Git to manage infrastructure lets you open environment definitions to every development team, site reliability team, security team and so on, giving each an up-to-date, full picture of what’s relevant to them, and the access to improve the pipeline.

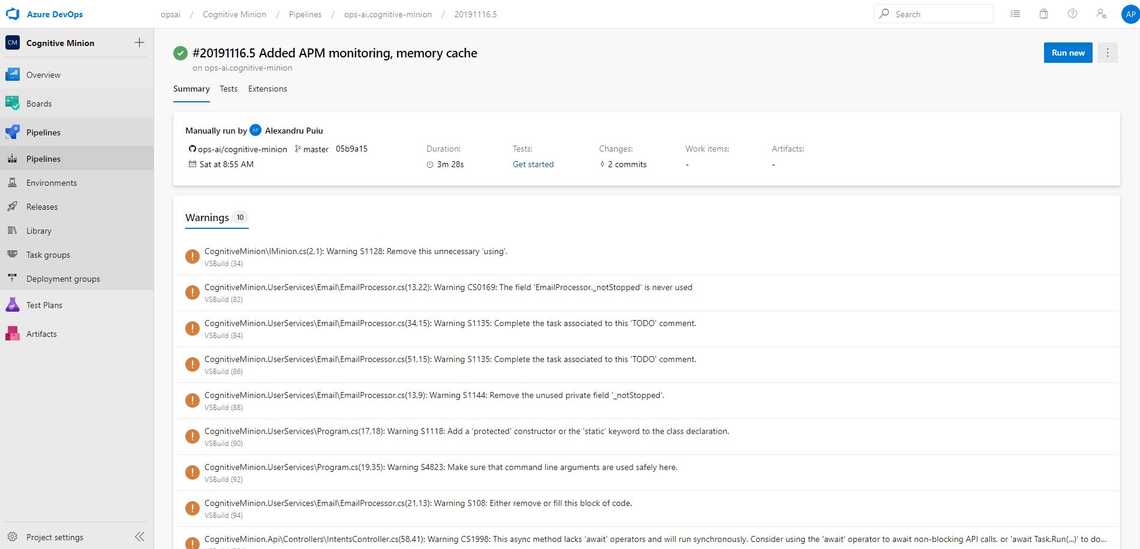

Azure DevOps Server or equivalent (Jira + Jenkins + Confluence + Octopus Deploy + TestRail)

On the software side of things, the build definition is defined as YAML in Azure DevOps Server.

Azure DevOps Server is a Microsoft product that provides version control, reporting, requirements management, project management, automated builds, lab management, testing and release management capabilities. It covers the entire application lifecycle, and enables DevOps capabilities.

HashiCorp Consul

Consul is a service networking solution to connect and secure services across any runtime platform and public or private cloud. The shift from static infrastructure to dynamic infrastructure changes the approach to networking from host-based to service-based. Connectivity moves from the use of static IPs to dynamic service discovery, and security moves from static firewalls to service identity.
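In practice, dynamic service discovery means asking the catalog where a service lives right now, instead of configuring a static IP. The sketch below assumes Consul’s HTTP health endpoint (`/v1/health/service/<name>` with the `passing` filter); the agent address, the `billing-api` service name, the helper names and the sample response are made up, and no network call is made.

```python
import json
from typing import List, Tuple
from urllib.parse import quote


def healthy_service_url(consul_addr: str, service: str) -> str:
    """URL that lists only the instances whose health checks are passing."""
    return f"{consul_addr.rstrip('/')}/v1/health/service/{quote(service)}?passing=true"


def endpoints(response_body: str) -> List[Tuple[str, int]]:
    """Turn a health response into (address, port) pairs."""
    result = []
    for entry in json.loads(response_body):
        service = entry["Service"]
        # An empty service address means the instance is reachable at its node's address.
        address = service.get("Address") or entry["Node"]["Address"]
        result.append((address, service["Port"]))
    return result


url = healthy_service_url("http://127.0.0.1:8500", "billing-api")

# Hand-written sample in the shape of the health endpoint's response.
sample_body = json.dumps([
    {"Node": {"Node": "node-a", "Address": "10.0.1.10"},
     "Service": {"Service": "billing-api", "Address": "10.0.1.15", "Port": 8443}},
    {"Node": {"Node": "node-b", "Address": "10.0.2.20"},
     "Service": {"Service": "billing-api", "Address": "", "Port": 8443}},
])
targets = endpoints(sample_body)
```

The caller never stores these addresses; it asks again when it needs them, which is what lets instances come and go freely.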

Cyxtera / Zero Trust

How you connect to your infrastructure is just as important for keeping safe as the resources themselves. Replacing traditional VPNs with modern zero trust solutions can bring real value in productivity and risk reduction. These solutions are capable of making authorization decisions on a packet-by-packet basis, based on the user sitting in front of the computer, and can hook into your systems for extended intelligence.

SonarQube

SonarQube uses language-specific analyzers and rules to scan code for mistakes, patterns that are known to introduce security vulnerabilities, and code smells.

https://scatteredcode.net/code-quality-using-sonarqube/

Elastic Stack (ELK) + APM

The Elastic Stack covers central log aggregation, application performance monitoring (APM) and SIEM.

Sitespeed.io

Sitespeed.io is a set of Open Source tools that makes it easy to monitor and measure the performance of your web site.

OWASP Dependency-Check

Dependency-Check is a software composition analysis utility that identifies project dependencies and checks whether they have any known, publicly disclosed vulnerabilities. Currently, Java and .NET are supported; additional experimental support has been added for Ruby, Node.js and Python, along with limited support for C/C++ build systems.

https://scatteredcode.net/installing-and-configuring-sonarqube-with-azure-devops-tfs/

Zed Attack Proxy (ZAP)

The OWASP Zed Attack Proxy (ZAP) is one of the world’s most popular free security tools and is actively maintained by hundreds of international volunteers. It can help you automatically find security vulnerabilities in your web applications while you are developing and testing them. It’s also a great tool for experienced pentesters to use for manual security testing.

ZAP can act as a headless server that runs scans submitted by the release pipeline.

Everything as Code

Alerts-as-Code

Datadog, Grafana, PagerDuty

To Do-as-Code

Google Calendar, G Suite, Todoist

Life-as-Code

Meetup (community-as-code), Domino’s Pizza (pizza-as-code)
