
When an application starts to become popular, it’s usually time to rejoice, but that depends on the exact shape of the trend and how prepared you are for it. Sometimes it’s just a matter of getting a more powerful server; cloud providers such as Azure have made that incredibly easy, and you can set autoscale to beef up your server when needed. At a certain point, however, that hits a limit, and you need to start scaling horizontally. In this post, I’ll discuss some concepts used by some of the largest applications on the planet, which are needed to create an application with high security and availability.

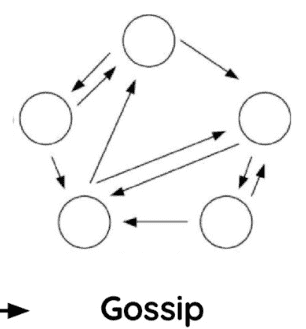

Gossip Protocols

Gossip protocols, also known as infection or epidemic protocols, are a category of peer-to-peer protocols in which nodes pass along information about the network that they know about and accept, but aren’t the authoritative source of. Some distributed systems use peer-to-peer gossip to ensure that information is efficiently disseminated to all members of a group. A really good use case is network discovery and state maintenance in a very large network, where direct chatter between all nodes would waste a lot of bandwidth. Gossip is especially useful when some nodes don’t have a direct path to communicate.

Network Discovery

A few years ago I came across a problem where I wanted to join a node to a large network, but didn’t want the overhead of manually reconfiguring servers to know about each other. The goal was for a node to fully join the network with as little information as possible. I came up with the following:

A new server posts its topology, containing just itself, to any one node in the existing network that it knows about. To start the cluster, this means that one server just posts itself to another server. The topology it posts includes its address, the current time, and any capabilities it wishes to advertise.

Every X seconds, or on a change in state, each server posts its current topology along with the current time to all the nodes it knows about.

Including the current time lets each receiver efficiently estimate the latency between nodes, assuming all servers are time-synced and that network communication takes the same amount of time in both directions.

Under this design, servers still know about each other even if they can’t reach each other, and know expected latency if they use intermediary servers to relay messages. This allows them to build a network diagram like those used in lower levels of the OSI Network Model, but while maintaining additional application-layer state such as current capabilities.
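The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the code I originally ran: the `NodeInfo` fields and the "freshest timestamp wins" merge rule are assumptions made for the example, and the latency estimate is only meaningful if the clocks really are synchronized.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeInfo:
    address: str
    last_seen: float                      # sender's clock when it announced itself
    capabilities: frozenset = frozenset()


def merge_topology(local: dict, received: dict) -> dict:
    """Merge a received topology into ours, keeping the freshest entry per address."""
    merged = dict(local)
    for address, info in received.items():
        known = merged.get(address)
        if known is None or info.last_seen > known.last_seen:
            merged[address] = info
    return merged


def one_way_latency(sent_at: float, received_at: float) -> float:
    """Estimate latency from the sender's timestamp (assumes time-synced servers)."""
    return max(0.0, received_at - sent_at)
```

A node that receives a post simply calls `merge_topology` with its own view and the posted one, so it learns about servers it has never talked to directly.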

Nearest neighbor gossip or delegation

A simple yet powerful optimization of the above is to use neighbors to disseminate information. Instead of sending your information to every node, you send it only to the nodes with the lowest latency, or to a few random nodes, on each update and trust that they’ll do the same on their part. This quickly disseminates information through the network while using only a small fraction of the messages that all-to-all updates would need, since that approach grows quadratically with the number of nodes.
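To get a feel for how quickly this spreads, here is a small simulation sketch of random-peer push gossip. The function name, the `fanout` parameter, and the round-based model are assumptions made for illustration; real networks are not this tidy.

```python
import random


def gossip_rounds(node_count: int, fanout: int, seed: int = 0) -> tuple[int, int]:
    """Simulate push gossip from node 0; return (rounds, messages) until all nodes know."""
    rng = random.Random(seed)
    informed = {0}
    rounds = 0
    messages = 0
    while len(informed) < node_count:
        rounds += 1
        newly_informed = set()
        for _ in informed:
            # Each informed node pushes the update to `fanout` random peers.
            # A node may pick itself or an already-informed peer; that waste is part of gossip.
            peers = rng.sample(range(node_count), fanout)
            messages += fanout
            newly_informed.update(peers)
        informed |= newly_informed
    return rounds, messages
```

Comparing `messages` against `node_count * (node_count - 1)`, the cost of every node telling every other node directly, shows where the savings come from as the network grows.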

Scalable Weakly-consistent Infection-style Process Group Membership Protocol (SWIM)

SWIM is another example of a really great protocol used in many systems today, including HashiCorp Consul. SWIM has a few additional optimizations which make it really good for large networks. For example, it relies on UDP and chooses a random node in the network to ping. If the ping is unsuccessful, it picks a few other random nodes and asks them to ping the target node on its behalf. If those indirect pings are also unsuccessful, it broadcasts a message in the network that it suspects the target node to be down. This suspicion mechanism leads to very few false positives under production scenarios, and a better overall state accounting, especially since UDP packets can be lost along the way.

https://www.cs.cornell.edu/projects/Quicksilver/public_pdfs/SWIM.pdf
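The probe cycle described above can be sketched as follows. This is a simplified illustration rather than the full protocol from the paper: the `ping` callable stands in for the UDP exchange, and the function name and `helpers` parameter are assumptions for the example.

```python
import random
from typing import Callable, Optional

Ping = Callable[[str, str], bool]  # (source, target) -> did an ack come back?


def probe(me: str, target: str, members: list, ping: Ping,
          helpers: int = 3, rng: Optional[random.Random] = None) -> str:
    """Run one SWIM-style probe of `target` and return 'alive' or 'suspect'."""
    rng = rng or random.Random()
    if ping(me, target):
        return "alive"
    # Direct ping failed: ask a few random members to ping the target for us.
    candidates = [m for m in members if m not in (me, target)]
    for helper in rng.sample(candidates, min(helpers, len(candidates))):
        if ping(me, helper) and ping(helper, target):
            return "alive"
    # Nobody could reach it: report a suspicion instead of declaring it dead.
    return "suspect"
```

The important design choice is the last line: a failed probe only produces a suspicion, which gives the target time to refute it before the rest of the network treats it as down.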

Infrastructure-As-Code

In a world where new technologies are invented, fulfill their purpose, and are sunset in the time it took me to write this post, it’s hard to come up with a comprehensive list of tools for this, and that list wouldn’t be of much value anyway, because it all depends on what the right fit is. The concept, however, is that the state of the infrastructure is declaratively described in versioned code, and automation is responsible for reshaping the infrastructure to meet the specification. This basically flips documentation around: the documentation of the network both describes and dictates the state, instead of being just an accounting of the last observed state or a stale representation of a previously designed state.

Check out my previous post where I go in depth on tools to achieve this.
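The core idea is tool-agnostic and can be sketched as a diff between desired and actual state. The `plan` function and the resource dictionaries below are hypothetical and not modeled on any particular tool; real tools also handle dependencies, ordering, and drift detection.

```python
def plan(desired: dict, actual: dict) -> list:
    """Return the (action, resource_name) steps that move `actual` toward `desired`."""
    steps = []
    for name, spec in desired.items():
        if name not in actual:
            steps.append(("create", name))
        elif actual[name] != spec:
            steps.append(("update", name))
    for name in actual:
        if name not in desired:
            steps.append(("delete", name))
    return steps


# `desired` would come from versioned code, `actual` from querying the environment.
desired = {"web": {"size": "large"}, "db": {"size": "medium"}}
actual = {"web": {"size": "small"}, "cache": {"size": "small"}}
steps = plan(desired, actual)
```

Because the desired state lives in version control, every change to the infrastructure shows up as a reviewable diff before automation applies it.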

https://scatteredcode.net/managing-a-multi-cloud-hybrid-environment-with-modern-devops-tools

Authentication, Authorization and Access Control

Authentication is the verification that a person or entity is who they say they are.

The process of authentication, however, is a lot more complicated than the concept. We need to make sure that the user is who they say they are, using as much information as we have available, but at the same time we must not overwhelm the user, and we have to be mindful of the sensitivity of the data this authentication assertion will be used for.

Typical authentication factors include a username and password, a code sent to the user’s phone by SMS or an automated voice call, a pre-allowed IP address, Google Authenticator TOTP (RFC 6238) / HOTP (RFC 4226) tokens, and FIDO U2F USB keys such as YubiKey. However, authentication techniques can go a lot further, leveraging browser fingerprinting, where a hash is generated based on characteristics of the browser (resolution, active plugins, features, etc.), and user behavior fingerprinting such as keyboard dynamics and mouse dynamics, where the speed and timing between key presses or the arcs and angles formed by moving the cursor generate an identifiable pattern for the user.

Check out my previous article on the subject for more authentication ideas.
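To make the TOTP / HOTP factor less abstract, here is a minimal sketch of both algorithms using only the Python standard library. It follows the HMAC-SHA1 construction from the two RFCs; the function names and defaults are my own, and a production system should rely on a vetted library and also handle clock drift and replay protection.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 over the 8-byte counter, dynamically truncated."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: float, step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP where the counter is the number of elapsed time steps."""
    return hotp(secret, int(unix_time // step), digits)
```

The server and the authenticator app share `secret`, so both can compute the same code for the current 30-second window without any network round trip.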

https://scatteredcode.net/authentication-ideas

OAuth2 / OpenID Connect and SAML 2.0

Authorization is the verification or assertion that the currently authenticated identity has access to a resource.

Authentication is really hard, and there are a lot of ways it can go wrong. So we want to make sure it’s done right, but that can be prohibitively expensive if we need to redo it for each application. Luckily, modern protocols allow us to do authentication in a single place and leverage all those controls from any number of applications quite simply. Protocols such as OAuth2 / OpenID Connect and SAML 2.0 allow us to federate the authorization of an application to another system. Under these protocols, the user is redirected to another system that performs all the necessary authentication and authorization, and provides the user with a token they can present to us as proof that they have been verified and are who they say they are.
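As an illustration of the redirect step, the sketch below builds an OAuth2 authorization code request with PKCE (RFC 7636). The endpoint URL, client ID, and redirect URI are placeholders I made up for the example; the parameter names are the standard ones.

```python
import base64
import hashlib
from urllib.parse import urlencode


def pkce_challenge(verifier: str) -> str:
    """S256 code challenge: base64url(SHA-256(verifier)) without padding."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def authorization_url(authorize_endpoint: str, client_id: str, redirect_uri: str,
                      scope: str, state: str, verifier: str) -> str:
    """Build the URL the user's browser is redirected to for login and consent."""
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": scope,
        "state": state,  # echoed back to us; protects against CSRF
        "code_challenge": pkce_challenge(verifier),
        "code_challenge_method": "S256",
    }
    return f"{authorize_endpoint}?{urlencode(params)}"


# Hypothetical values, for illustration only.
url = authorization_url("https://login.example.com/authorize", "my-app",
                        "https://app.example.com/callback", "openid profile",
                        "random-state", "dBjftJeZ4CVP-mB92K27uhbUJU1p1r_wW1gFWFOEjXk")
```

The application never sees the user’s password; it only receives a code at the redirect URI, which it then exchanges for tokens.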

System for Cross-domain Identity Management (SCIM)

A typical problem we have with distributed systems using OAuth 2, OpenID Connect, SAML, and others is that the information those systems store about users changes. In the United States, for example, a user’s email address might change if they get married and change their last name. This can result in cross-system consistency issues if the email address was used to map the user between systems. SCIM (RFC 7643 and RFC 7644) addresses this by defining a standard JSON schema and REST API for keeping identity data in sync: the identity source sends each connected application JSON requests describing the changes to the resources that application cares about.
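Here is a sketch of what handling such a change might look like on the receiving side. The request body uses the SCIM PatchOp message format; the `apply_scim_patch` helper is hypothetical and only handles simple top-level attribute paths, not the full path filter syntax from RFC 7644.

```python
PATCH_SCHEMA = "urn:ietf:params:scim:api:messages:2.0:PatchOp"


def apply_scim_patch(user: dict, patch: dict) -> dict:
    """Apply simple top-level add/replace/remove operations to a stored user record."""
    if PATCH_SCHEMA not in patch.get("schemas", []):
        raise ValueError("not a SCIM PatchOp message")
    updated = dict(user)
    for operation in patch["Operations"]:
        op = operation["op"].lower()
        path = operation["path"]  # this sketch assumes a plain attribute name
        if op in ("add", "replace"):
            updated[path] = operation["value"]
        elif op == "remove":
            updated.pop(path, None)
    return updated


# Users are keyed by the stable SCIM `id`, never by email address.
users = {"2819c223": {"id": "2819c223", "userName": "jane.doe@example.com"}}
patch = {
    "schemas": [PATCH_SCHEMA],
    "Operations": [{"op": "replace", "path": "userName", "value": "jane.smith@example.com"}],
}
users["2819c223"] = apply_scim_patch(users["2819c223"], patch)
```

Mapping users by the immutable `id` means the name change above updates one attribute instead of orphaning the account.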

Vectors of Trust

We can have requirements that extend beyond the typical allow/deny models and authorize based on dynamic context and the strength of the user’s identity. Let’s say the requirement calls for the user to come in from a location they normally log in from, otherwise they need to complete MFA, and we need to have a high degree of certainty that this account belongs to a real human being (e.g., we need to have a driver’s license on file for them).

The system can determine that it needs the user to go back to the authorization server and come back with more information, so it issues a challenge describing what it needs. Using the IETF’s Vectors of Trust (RFC 8485) with OpenID Connect, we can forward the user to get another access token and communicate the additional vector combinations the user must meet. Vectors, in this case, could be authentication strength (password challenge, MFA), identity strength (verified points of identity such as a passport, driver’s license, or W3C Decentralized Identifiers / DIDs), known user location (location services, IP address), and/or device security and compliance (firewall on, recent malware scan). The authentication server can prompt the user for any additional information it needs, or involve other services in the verification.

https://www.rfc-editor.org/rfc/rfc8485
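A rough sketch of the resource-side check might look like this. RFC 8485 expresses a vector as dot-separated components such as `P1.Cc.Ab`, with the request carried in `vtr` and the result in the `vot` claim. Treating "satisfies" as "contains every requested component" is a simplifying assumption of this example, as are the function names.

```python
def parse_vector(vector: str) -> set:
    """Split a vector of trust such as 'P1.Cc.Ab' into its components."""
    return set(vector.split("."))


def satisfies(vot: str, vtr: list) -> bool:
    """True if the presented vector covers at least one of the acceptable vectors."""
    presented = parse_vector(vot)
    return any(parse_vector(requested) <= presented for requested in vtr)


def needs_step_up(claims: dict, vtr: list) -> bool:
    """Decide whether to send the user back to the authorization server."""
    return not satisfies(claims.get("vot", ""), vtr)
```

If `needs_step_up` returns true, the application redirects the user again and includes the acceptable vectors in the request, so the authorization server knows what to prompt for.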

BeyondAuth Access Control

Access control consists of the checks performed on each request in order to allow or deny access to a resource. In the case of JWT tokens, we need to verify that we trust the issuer of the token, that the token is signed with a trusted key published in the JWKS of the authorization server, that the token is within its validity period, that the necessary scopes are contained in it, and that the necessary authentication level was performed. Sensitive resources might require that MFA was performed.
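The claim checks can be sketched as below. This deliberately skips signature verification, which should be done by a JWT library against the issuer’s JWKS before any of this runs. The function name is hypothetical, and the use of a space-delimited `scope` claim and an `amr` value of `mfa` are assumptions about how the authorization server populates the token.

```python
import time


def check_claims(claims: dict, trusted_issuers: set, required_scopes: set,
                 require_mfa: bool = False, now=None) -> list:
    """Return the reasons to reject the token; an empty list means the claims pass."""
    now = time.time() if now is None else now
    problems = []
    if claims.get("iss") not in trusted_issuers:
        problems.append("untrusted issuer")
    if now >= claims.get("exp", 0):
        problems.append("token expired")
    if now < claims.get("nbf", 0):
        problems.append("token not yet valid")
    granted = set(claims.get("scope", "").split())
    if not required_scopes <= granted:
        problems.append("missing scope")
    if require_mfa and "mfa" not in claims.get("amr", []):
        problems.append("MFA required")
    return problems
```

Returning every failed check, rather than stopping at the first, makes it easier to log why a request was denied.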

In addition to the integrity and validity of the JWT token, we need to make sure it wasn’t intercepted, and we have extension protocols such as OAuth 2.0 Mutual-TLS Client Authentication and Certificate-Bound Access Tokens (RFC 8705) to help ensure the token wasn’t stolen.
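With certificate-bound tokens, the access token carries a `cnf` claim whose `x5t#S256` member is the SHA-256 thumbprint of the client certificate. A minimal sketch of the check follows; it assumes the TLS layer hands us the client certificate as DER bytes, and the function names are my own.

```python
import base64
import hashlib


def certificate_thumbprint(cert_der: bytes) -> str:
    """base64url-encoded SHA-256 hash of the DER certificate, without padding."""
    digest = hashlib.sha256(cert_der).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def token_bound_to_certificate(claims: dict, cert_der: bytes) -> bool:
    """True only if the token names the certificate used on this TLS connection."""
    expected = claims.get("cnf", {}).get("x5t#S256")
    return expected is not None and expected == certificate_thumbprint(cert_der)
```

A stolen token is useless on its own, because the thief would also need the private key for the certificate the token is bound to.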

We now know who the user is and that their token wasn’t stolen. Once this is all taken care of, we get to the actual checks to make sure the user should indeed have access to the resource, that is, that the user meets the necessary criteria. This could be coarse-grained, such as the user being in a particular role; fine-grained, such as an Access Control List; context-based, determined by the action they are performing; or time-based. It could also leverage more modern requirements, such as ones based on recent behavior.

Some examples of requirements:

- Time-based: Access to the resource is only allowed between 9 AM-5 PM ET.

- Header Analysis/Fingerprint: Header order and user-agent string can be compared with known browser behaviors.

- Population Behavior: Similar authorization sequences/patterns performed by users over time, grouped into populations. Neo4j, NSA’s LemonGraph, and Darktrace come to mind.

- Open Support Ticket: There’s an open ticket in the helpdesk, assigned to the user for working on this server/data from external integration.

- Member of a Group / Role: The user belongs to a group/role.

- Current Location: The user’s IP or reported device location places them inside a corporate office.

- Current Device: They are accessing the resource from a known, clean device as reported by an inventory and security assertion service like OPSWAT MetaAccess.

- JWT Scope: A specific scope is present in the JWT token.

- Username: The requirement lists that only a specific user can access this.

- Authorization Sequence: The request passed through your firewall, then reverse proxy gateway and both reported that they let you through.

- Remote Authorization: Authorization decision pauses the request while something prompts you on your phone to allow the request.

- Time of Day: You’re typically active during this time.

- XACML JSON Profile 3.0 v1.1: Custom policy defined in XACML.
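Requirements like the ones listed above compose naturally if each one is a small predicate over the request context. The sketch below shows the idea with three of them. The `RequestContext` fields and helper names are hypothetical, and the hour is assumed to already be converted to the policy’s time zone.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RequestContext:
    username: str
    roles: set = field(default_factory=set)
    scopes: set = field(default_factory=set)
    local_hour: int = 0  # hour of day in the policy's time zone


Requirement = Callable[[RequestContext], bool]


def in_role(role: str) -> Requirement:
    return lambda ctx: role in ctx.roles


def has_scope(scope: str) -> Requirement:
    return lambda ctx: scope in ctx.scopes


def within_hours(start: int, end: int) -> Requirement:
    return lambda ctx: start <= ctx.local_hour < end


def authorize(ctx: RequestContext, requirements: list) -> bool:
    """Allow the request only if every requirement is met."""
    return all(requirement(ctx) for requirement in requirements)


policy = [in_role("admin"), has_scope("reports.read"), within_hours(9, 17)]
```

New requirement types, such as an open support ticket or a device compliance check, become new functions that return a predicate, without changing `authorize`.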
